I had the pleasure of taking part in a discussion over dinner on the topic of Trust when it comes to GeoAI (don’t get me started) at the Royal Society last week, sponsored by InnovateUK as part of their GeoAI Festival. While I think everyone agreed this is very much an “emerging field”, it’s not as if we, as an industry, are unfamiliar with the concept, in particular when it comes to location privacy.

In an era where our smartphones are essentially extensions of our physical selves, the question of privacy, specifically location privacy, has moved from a niche technical concern to a primary user priority. Understanding the psychology of digital trust is key to bridging the gap between providing useful, localised services and respecting the inherent sensitivity of a user’s physical movements: a digital fingerprint of unique importance.

The Sensitivity of “Where”

Unlike a username or an email address, location data reveals a person’s life in real-time: their home, their workplace, their hobbies, and even their health habits. Because this data is so personal, the “trust threshold” for location-based services is significantly higher than for other types of digital interaction.

Smartphone users are no longer blindly clicking “Allow.” Instead, they are performing a rapid, often subconscious cost-benefit analysis. They ask themselves: Is the value of this localised content worth the potential risk to my privacy?

To win this internal debate, location platforms and app developers must focus on two things: transparency and, perhaps most vitally, immediate relevance.

Signals of Trust

How does a user decide an app is trustworthy? Users are becoming sophisticated in their understanding of location privacy, perhaps more so than we in the industry appreciate. I would argue this understanding is the result of the best practices the industry has largely adopted over the last decade or so.

There are several key “trust signals” that influence user behaviour:

- Visual Cues and Timing: Trust isn’t built through the fine print of an app’s “Terms of Service”; it’s built through interface design. Clear icons, brief notices, and confirmation messages that appear at the moment the data is needed—rather than in a “blanket request” at first launch—help reassure users.

- Plain Language: Technical jargon and “legalese” are trust-killers. Users respond far more positively to short, plain-language explanations that answer the simple question: “Why do you need to know where I am?”

- Neutral Tone: Persuasive or pushy language (“You must enable location for the best experience!”) can trigger a defensive response. In contrast, neutral, explanatory language feels more respectful of the user’s autonomy.

Permission Design

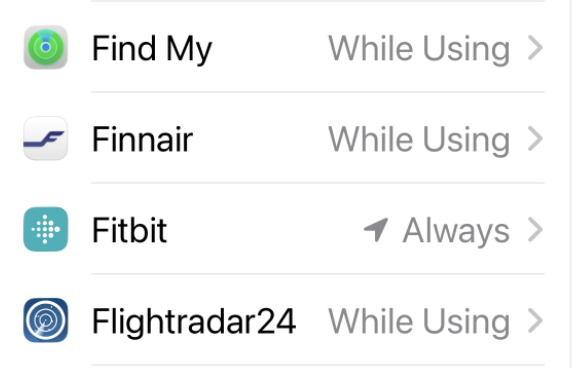

One of the most effective ways to build confidence is by offering granular control. When a user is given the choice to “Allow Once” or “Only While Using the App,” they feel empowered rather than cornered. This shift from all-or-nothing data collection to situational access is a cornerstone of modern privacy standards (like those seen in recent Android and iOS updates) and is essential for maintaining long-term user engagement.

Where the industry could do better is in consistency. If a system repeatedly asks for the same permissions after being denied, or if settings seem to change without user input, trust evaporates. Reliability across sessions creates a “safety net” that encourages users to return.
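To make the permission-design points concrete, here is a minimal Python sketch of a client-side policy that asks only at the moment of need, accepts a one-time or while-using grant, never re-prompts after a denial, and keeps decisions stable across sessions. Everything in it is hypothetical: `Grant`, `LocationPermissionPolicy`, `set_from_settings`, and the plain dict standing in for persistent storage are illustrative names, not the Android or iOS permission APIs, which own the actual system dialogs.

```python
from enum import Enum
from typing import Callable, Optional


class Grant(Enum):
    """Hypothetical user choices, loosely mirroring 'Allow Once' / 'While Using' / 'Don't Allow'."""
    ALLOW_ONCE = "allow_once"
    WHILE_USING = "while_using"
    DENIED = "denied"


class LocationPermissionPolicy:
    """Illustrative policy: ask at the moment of need, never re-ask after a
    denial, and keep decisions stable across sessions."""

    def __init__(self, store: dict):
        # 'store' stands in for persistent storage that survives app restarts.
        self.store = store

    def start_session(self) -> None:
        # A one-time grant only covers the session in which it was given.
        if self.store.get("grant") is Grant.ALLOW_ONCE:
            self.store.pop("grant")

    def request(self, reason: str, prompt: Callable[[str], Grant]) -> bool:
        """Return True if location may be used now. 'prompt' shows the user a
        plain-language reason and returns their choice; it is only called when
        no decision is stored."""
        grant = self.store.get("grant")
        if grant is Grant.DENIED:
            return False  # respect the earlier refusal: no repeated prompts
        if grant is None:
            grant = prompt(reason)
            self.store["grant"] = grant
        return grant in (Grant.ALLOW_ONCE, Grant.WHILE_USING)

    def set_from_settings(self, grant: Optional[Grant]) -> None:
        # Only an explicit user action (e.g. in a settings screen) changes a
        # stored decision; None clears it so the app may ask again later.
        if grant is None:
            self.store.pop("grant", None)
        else:
            self.store["grant"] = grant


if __name__ == "__main__":
    asked = []

    def deny(reason: str) -> Grant:
        asked.append(reason)
        return Grant.DENIED

    policy = LocationPermissionPolicy(store={})
    policy.start_session()
    print(policy.request("To show cafes near you", deny))  # False
    print(policy.request("To show cafes near you", deny))  # False, not re-asked
    print(len(asked))  # 1
```

Treating a denial as sticky until the user changes it themselves is one way to get the reliability across sessions described here. A real app would also have to follow each platform's own rules for when, and how often, the system permission dialog may be shown.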

Transparency and Context Relevance

One of the recurring points in the discussion on Trust in GeoAI was Transparency.

Transparency should not be a one-time event during the onboarding process. Privacy information should be easily accessible at all times, allowing users to review how their data is being used whenever they feel the need. This ongoing transparency transforms a transaction (data for service) into a relationship.

However, even the most transparent app will lose a user’s trust if the content delivered isn’t relevant. A balance must be struck between utility and restraint. Over-targeting, showing too much location-specific detail too quickly, can feel “creepy” and invasive. For instance, if an app knows exactly which aisle of a store you are in before you’ve even expressed interest in a product, the relevance is overshadowed by the intrusiveness. The goal is to provide content that aligns with the user’s intent without crossing the line into surveillance.

The app stores of both the iPhone and Android ecosystems still contain a large selection of flashlight apps that request the user’s location. There is, of course, no justification for this other than to support local advertising and data gathering. Most users recognise this, but sometimes “break the glass” because they are somewhere dark without a torch. There is no trust here, so perhaps sometimes app usage is purely transactional?

Autonomy: Opting In and Out

Trust is deeply linked to the ability to walk away. Systems that make it easy to opt-out of location tracking, or that provide a “private mode” for exploring sensitive topics, demonstrate a respect for the user that pays dividends in brand loyalty.

Whether a user is looking for a local restaurant or navigating more sensitive local listings, they want to know they are in the driver’s seat. Systems that prioritise discretion and allow users to explore options without being forced into a data-sharing agreement are ultimately the ones that will thrive in a privacy-conscious market.

Lessons for GeoAI?

As location-based technology becomes more sophisticated, the “creepy factor” remains the biggest hurdle for developers. Trust is not a static checkbox but a conversation, lasting as long as a user makes use of an app or service. By combining thoughtful permission design, plain-language transparency, and respect for user autonomy, platforms can provide the localised experiences users want without making them feel like they’ve sacrificed their privacy to get them.

Although location technology can seem like a magic, invisible force, and most users do not fully understand how it operates, society does now have an understanding and expectation of “how” the system works, both technically and, just as importantly, in terms of business models: location sharing is a two-way street!

The same cannot be said for many AI systems based on foundation models; by their nature, they remain “black box” systems. Their capability derives from training data, but how that data is used to respond to a given user interaction or prompt is non-deterministic and hard to trace.

Transparency is key in building trust for location technology but may not be enough for GeoAI; explainable GeoAI still needs some work.