The promise of frontier AI models is often limited not by compute power or architectural ingenuity, but by the quality, legality, and sheer accessibility of the data used to train them.

My go-to phrase for the last few years remains..

The source code for AI is data!

We’ve spent years in the geospatial and data policy world wrestling with silos and proprietary APIs. Now, the AI industry is finding its own answer to this fragmentation: the Model Context Protocol (MCP).

While much of the recent press focuses on MCP’s role in powering sophisticated AI agents and reducing LLM hallucinations at runtime, the real long-term impact lies further up the chain—in standardising how models acquire the data they need to be built and continuously refined.

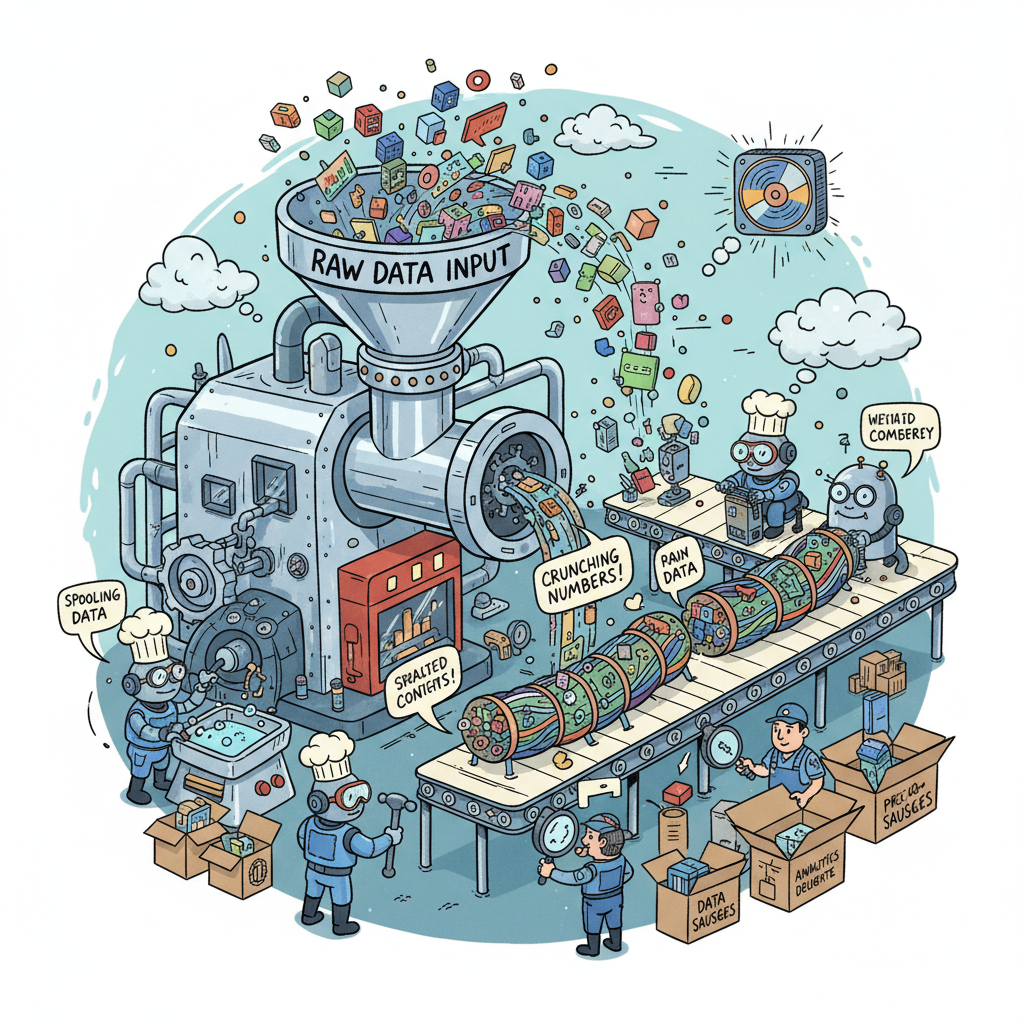

The AI sausage factory..

Historically, training large AI models meant building a bespoke, often messy pipeline for every new data source. Licensing agreements, ingestion methods, metadata parsing, and normalisation all required custom engineering. Yes, I know GIS people have been dealing with this for decades too!

So the core problem MCP addresses is the lack of a universal data contract for AI. Data lives in thousands of disparate silos: internal databases, cloud file systems, proprietary APIs, and data commons. Every integration is a one-off effort.

The Model Context Protocol, open-sourced by Anthropic and rapidly adopted across the industry (including by Google and OpenAI), solves this by establishing an open standard for two-way communication between AI applications (MCP clients) and external systems (MCP servers).

Think of MCP as the USB-C interface for AI. Instead of creating a custom cable (API connector) for every data source, developers simply need to ensure the data source exposes an MCP server, which provides a standardised interface for discovery, authentication, and structured data delivery.
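To make the USB-C analogy a little more concrete: MCP messages are JSON-RPC 2.0, and the spec names methods such as `tools/list` (discovery) and `tools/call` (invocation). The minimal, stdlib-only Python sketch below puts two hypothetical data sources behind toy servers and uses one client class to talk to both, with no source-specific connector code. The class names, tools and values are invented for illustration; this is not the official MCP SDK, and it deliberately skips the transport, the `initialize` handshake and authentication.

```python
import json
from typing import Any, Callable, Dict, List, Optional


class ToyMCPServer:
    """Hypothetical in-process stand-in for an MCP server.

    Answers only two JSON-RPC 2.0 methods named after the MCP spec,
    "tools/list" and "tools/call". No transport, no handshake, no auth.
    """

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: Dict[str, Dict[str, Any]] = {}

    def add_tool(self, name: str, description: str, fn: Callable[..., Any]) -> None:
        self._tools[name] = {"description": description, "fn": fn}

    def handle(self, raw: str) -> str:
        req = json.loads(raw)
        method, params = req["method"], req.get("params", {})

        def reply(**body: Any) -> str:
            return json.dumps({"jsonrpc": "2.0", "id": req["id"], **body})

        if method == "tools/list":
            tools = [{"name": n, "description": t["description"]}
                     for n, t in self._tools.items()]
            return reply(result={"tools": tools})
        if method == "tools/call":
            tool = self._tools.get(params.get("name"))
            if tool is None:
                return reply(error={"code": -32602, "message": "Unknown tool"})
            value = tool["fn"](**params.get("arguments", {}))
            # Tool results come back as a list of typed content items.
            return reply(result={"content": [{"type": "text", "text": json.dumps(value)}]})
        return reply(error={"code": -32601, "message": "Method not found"})


class ToyClient:
    """One client class that works unchanged against any ToyMCPServer."""

    def __init__(self, server: ToyMCPServer) -> None:
        self.server = server
        self._next_id = 0

    def _request(self, method: str, params: Optional[Dict[str, Any]] = None) -> Any:
        self._next_id += 1
        msg = {"jsonrpc": "2.0", "id": self._next_id, "method": method,
               "params": params or {}}
        response = json.loads(self.server.handle(json.dumps(msg)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]

    def list_tools(self) -> List[str]:
        return [t["name"] for t in self._request("tools/list")["tools"]]

    def call(self, name: str, **arguments: Any) -> Any:
        result = self._request("tools/call", {"name": name, "arguments": arguments})
        return json.loads(result["content"][0]["text"])


# Two unrelated, hypothetical data sources behind the same interface.
census = ToyMCPServer("census")
census.add_tool("population", "Population for a region (toy values)",
                lambda region: {"region-a": 1000, "region-b": 2500}.get(region))

parcels = ToyMCPServer("land-parcels")
parcels.add_tool("parcel_area", "Area of a land parcel in square metres (toy values)",
                 lambda parcel_id: {"id": parcel_id, "area_m2": 420.0})

for server in (census, parcels):
    print(server.name, ToyClient(server).list_tools())
```

The point of the design is that `ToyClient` never needs to know whether it is talking to a census table or a parcel register; adding a third source means standing up another server, not writing another connector.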

Better training and more?

While MCP is primarily aimed at supplying models with context at runtime (much as Retrieval-Augmented Generation, or RAG, does), its standardisation effects may also bring real benefits to the lifecycle of creating and maintaining high-quality training datasets, and improve geodata management practices in general.

For example:

- Simplified Data Curation and Continuous Learning.

MCP standardises how servers describe and deliver data, including contextual metadata about each resource. For data providers, this means the effort required to make a dataset consumable by AI drops dramatically. This standardisation allows model developers to ingest data from diverse sources (Postgres, GitHub, Google Drive) through a unified mechanism.

- Standardized access enforces a clearer chain of custody.

When a model accesses a dataset through an MCP server, the connection is structured and auditable. This aids in creating transparent data cards for trained models, making it easier to trace which specific version of a dataset, exposed via a specific MCP server, contributed to a model’s training, boosting trust and compliance.

- Access to Niche and Enterprise Data

For specialised models, particularly in domains like geospatial or finance, the most valuable data often sits locked behind firewalls or in niche, non-public systems. By providing a secure, standardized way for enterprises to expose their internal data repositories via an MCP server, the protocol opens up a powerful channel for the targeted, permissioned data access needed for domain-specific model training and custom enterprise LLMs.

And yes, this is where I think the real money will be made in AI!
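The ingestion and chain-of-custody points can be illustrated with a small sketch. MCP resource metadata includes fields such as a `uri`, a `name` and a `mimeType`; the idea below is that, because every source arrives through the same kind of interface, one function can stamp each payload with where it came from plus a content hash, and that list can feed the "training sources" section of a data card. The server names, URIs, the `version` field and the data-card layout are all hypothetical assumptions, not part of MCP.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ProvenanceRecord:
    server: str     # which MCP server delivered the data
    uri: str        # resource URI as exposed by that server
    mime_type: str  # MIME type reported in the resource metadata
    version: str    # hypothetical: assumes the provider publishes a dataset version
    sha256: str     # fingerprint of the exact bytes that were ingested


def ingest(server: str, resource: Dict[str, str], payload: bytes) -> ProvenanceRecord:
    """Record where a payload came from, regardless of the backing system."""
    return ProvenanceRecord(
        server=server,
        uri=resource["uri"],
        mime_type=resource.get("mimeType", "application/octet-stream"),
        version=resource.get("version", "unknown"),
        sha256=hashlib.sha256(payload).hexdigest(),
    )


def data_card_sources(records: List[ProvenanceRecord]) -> str:
    """Render a deterministic 'training sources' section for a data card."""
    rows = sorted((asdict(r) for r in records), key=lambda row: (row["server"], row["uri"]))
    return json.dumps({"training_sources": rows}, indent=2)


# Hypothetical servers, URIs and payloads standing in for Postgres and Google Drive sources.
records = [
    ingest("postgres-parcels",
           {"uri": "postgres://parcels/2024", "mimeType": "text/csv", "version": "2024.1"},
           b"id,area_m2\n1,420\n"),
    ingest("drive-reports",
           {"uri": "gdrive://reports/flood-survey", "mimeType": "application/pdf"},
           b"%PDF-1.7 toy bytes"),
]
print(data_card_sources(records))
```

Hashing the exact bytes means a later audit can check whether the data a server serves today is the same data that went into a given training run.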

A Case Study from my old life..

The real-world potential of MCP’s standardisation is well demonstrated by its use in opening up complex public datasets. Google’s recent announcement of the Data Commons Model Context Protocol (MCP) Server is a great example.

Data Commons aggregates vast, interconnected public statistical information (data on health, economics, demographics, and more) which is often scattered across thousands of disparate silos and described in complex, technical jargon. Historically, using this data in an AI application required developers to learn and integrate with a complex, proprietary API.

The immediate benefit is that any MCP-enabled AI agent can consume Data Commons natively using standardized MCP requests, which accelerates the creation of data-rich, agentic applications, the current flavour of the month!

Still work to do…

It is still early days for MCP. We are some way from universal adoption, and there are still challenges that will need to be addressed..

Introducing a standardized, two-way connection to sensitive data systems, where an autonomous AI agent (the MCP client) can request and act upon data, inherently introduces new security vectors. If not secured with robust authentication and granular authorisation (something MCP aims to support, but which is server-dependent), the protocol could become a standardized way for compromised AI agents to access enterprise databases.
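Deny-by-default, per-tool authorisation is the kind of server-side safeguard this concern calls for. The sketch below is hypothetical: the tool names, scope strings and policy table are invented, and it is not the MCP authorization specification. It only shows the general idea: each tool call is checked against the scopes granted to the calling agent, anything not explicitly exposed is refused, and every decision is written to an audit log.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, FrozenSet, List, Tuple

# Hypothetical policy table: the scope each exposed tool requires.
# Tools not listed here are denied by default.
TOOL_SCOPES: Dict[str, str] = {
    "query_parcels": "parcels:read",
    "update_parcel": "parcels:write",
}

# Every decision is recorded as (agent_id, tool_name, allowed).
AUDIT_LOG: List[Tuple[str, str, bool]] = []


class AuthorisationError(PermissionError):
    """Raised when an agent may not call a tool."""


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    scopes: FrozenSet[str] = field(default_factory=frozenset)


def authorise(agent: AgentIdentity, tool_name: str) -> None:
    required = TOOL_SCOPES.get(tool_name)
    if required is None:
        raise AuthorisationError(f"{tool_name!r} is not an exposed tool")
    if required not in agent.scopes:
        raise AuthorisationError(f"{agent.agent_id} lacks scope {required!r}")


def guarded_call(agent: AgentIdentity, tool_name: str,
                 handler: Callable[..., Any], **arguments: Any) -> Any:
    """Check authorisation and log the decision before any data is touched."""
    try:
        authorise(agent, tool_name)
    except AuthorisationError:
        AUDIT_LOG.append((agent.agent_id, tool_name, False))
        raise
    AUDIT_LOG.append((agent.agent_id, tool_name, True))
    return handler(**arguments)


# A read-only analyst agent: reads succeed, writes are refused and logged.
analyst = AgentIdentity("analyst-agent", frozenset({"parcels:read"}))
print(guarded_call(analyst, "query_parcels", lambda parcel_id: {"id": parcel_id}, parcel_id="P-1"))
try:
    guarded_call(analyst, "update_parcel", lambda **kwargs: None, parcel_id="P-1")
except AuthorisationError as err:
    print("denied:", err)
```

Because the check runs before the handler, a compromised agent holding only a read scope cannot reach write paths, and the audit log doubles as evidence for the chain-of-custody story.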

While the protocol has seen rapid initial adoption, the ecosystem is still in its infancy (it is literally about a year old!). There is a risk that a major player could splinter the standard by introducing a non-interoperable alternative, leading to the exact data fragmentation the protocol was designed to solve. Hey, that’s never happened before, right?

For simple use cases, the MCP architecture (Host, Client, Server, Transport Layer) adds another layer of abstraction between the model and the data. This complexity can introduce overhead in terms of latency, debugging, and infrastructure management compared to direct, custom API calls.

What, not how!

The Model Context Protocol is a defining step towards treating AI data access as an infrastructural concern rather than a bespoke engineering project. By standardizing the interface, MCP shifts the focus from how to connect data to what data to connect.

MCP may also serve as a wake-up call to geospatial data users to rethink how we publish and consume data in a world reshaped by the requirements of training AI.